AMD Radeon RX 9070 expected to start at $479, benchmarks now released (UP: RX 9000 series reveal on Feb 28; hits shelves in early March)

- Thread starter DoubleClutch

64gigabyteram

Reverse groomer.

There still PowerColor Reaper/Sapphire Pulse

my problem is their coolers- they're ugly compared to the reference model and always so large while having the gall to ask more money. Nvidia shrunk back down to 2 slot coolers with the 50 series and yet the vast majority of AIB coolers are oversized still.

I guess i'll look out for those brands when the card comes out.

Gaiff

SBI’s Resident Gaslighter

This is the price that consumers pay, when there is no competition between companies.

Both Nvidia and TSMC have no proper competition, so they jacked up prices as much as their greed desired.

This. While NVIDIA is certainly a greedy company, TSMC isn't blameless at all. They're the ones jacking up prices across the board because they're by far the best and most effective supplier. Their supply can't even meet the demand, so they ask for even more. There's a reason the US government invested billions in building that Micron plant in Idaho.

Radical_3d

Member

This is the price that consumers pay, when there is no competition between companies.

Both Nvidia and TSMC have no proper competition, so they jacked up prices as much as their greed desired.

The TSMC situation is specially hellish. By the time the west started to think that maybe it was not a good idea to have all the chip production of the, that we use to literally everything, it was already too late.

SolidQ

Member

they're ugly compared to the reference model and always so large while having the gall to ask more money.

Looks fine and like reference most - https://www.powercolor.com/product-detail224.htm

Reaper and Pulse usually selling at MSRP + 10-20$

winjer

Member

The TSMC situation is specially hellish. By the time the west started to think that maybe it was not a good idea to have all the chip production of the, that we use to literally everything, it was already too late.

TSMC is aligned with the US. Taiwan is dependent on the USA for their security.

And TSMC is building new Fabs in the US. There are even rumors that TSMC could buy Intel's Fabs and run them in the US.

The real problem is lack of competition.

And if TSMC gets their hands on Intel's Fabs, it will become even worse.

64gigabyteram

Reverse groomer.

This. While NVIDIA is certainly a greedy company, TSMC isn't blameless at all. They're the ones jacking up prices across the board because they're by far the best and most effective supplier. Their supply can't even meet the demand, so they ask for even more. There's a reason the US government invested billions in building that Micron plant in Idaho.

I'm honestly starting to wonder what's the point of many of these discussions over semiconductors and fabs

don't get me wrong, i'm sure they're important as they're needed to even make this tech in the first place- but don't these cards usually take like 300~ or so to manufacture on both companies ends?

If AMD wanted to they could release the 9070xt for 449 and get easy marketshare while still making OK margins. And Nvidia could drop their cards to turing pricing and still make a healthy profit on their end too.

Isn't this moreso on Nvidia/AMD than TSMC?

Not very knowledgeable on semiconductors/GPU manufacturing here which is why this is a question.

winjer

Member

I'm honestly starting to wonder what's the point of many of these discussions over semiconductors and fabs

don't get me wrong, i'm sure they're important as they're needed to even make this tech in the first place- but don't these cards usually take like 300~ or so to manufacture on both companies ends?

If AMD wanted to they could release the 9070xt for 449 and get easy marketshare while still making OK margins. And Nvidia could drop their cards to turing pricing and still make a healthy profit on their end too.

Isn't this moreso on Nvidia/AMD than TSMC?

Not very knowledgeable on semiconductors/GPU manufacturing here which is why this is a question.

The most expensive part in a GPU is the chip. So if wafer prices go up, that means the cost of the chips go up.

And that means the cost of the GPU has to go up.

Even if Nvidia maintained their margin of profit, they would still have to increase prices.
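To make the constant-margin point concrete, here is a minimal sketch. Every figure in it is a made-up illustration, not a real AMD, Nvidia or TSMC cost, and `price_for_margin` is a hypothetical helper that assumes the selling price is simply unit cost divided by (1 - gross margin).

```python
def price_for_margin(unit_cost: float, gross_margin: float) -> float:
    """Selling price that yields the given gross margin (a fraction of price)."""
    if not 0.0 <= gross_margin < 1.0:
        raise ValueError("gross_margin must be in [0, 1)")
    return unit_cost / (1.0 - gross_margin)


# Hypothetical numbers, for illustration only.
OTHER_COSTS = 150.0  # board, memory, cooler, logistics (assumed)
MARGIN = 0.5         # gross margin held constant (assumed)

price_before = price_for_margin(150.0 + OTHER_COSTS, MARGIN)  # chip costs $150
price_after = price_for_margin(200.0 + OTHER_COSTS, MARGIN)   # chip costs $200

print(f"before: ${price_before:.0f}, after: ${price_after:.0f}")
```

In this toy model a $50 rise in chip cost moves the shelf price by $100, even though the margin percentage never changed.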

64gigabyteram

Reverse groomer.

The most expensive part in a GPU is the chip. So if wafer prices go up, that means the cost of the chips go up.

And that means the cost of the GPU has to go up.

Even if Nvidia maintained their margin of profit, they would still have to increase prices.

I'm aware of all that. It's the margin of profit that's the issue here. I doubt that these GPUs actually cost upwards of a thousand dollars for Nvidia to make, but they still charge so much for them. Whatever TSMC charges Nvidia is probably not that crazy considering how much cheaper a lot of other general tech products are by comparison- iPhones are still 800 bucks even despite TSMC's monopoly.

FingerBang

Member

I'm aware of all that. It's the margin of profit that's the issue here. I doubt that these GPUs actually cost upwards of a thousand dollars for Nvidia to make, but they still charge so much for them. Whatever TSMC charges Nvidia is probably not that crazy considering how much cheaper a lot of other general tech products are by comparison- iPhones are still 800 bucks even despite TSMC's monopoly.

It's a bit more complicated than that. You can't look at the price of the chip AMD is paying and just assume what the margins are.

On that card AMD (or Nvidia and their AIB partners) needs to account for:

- The cost of the materials (card and the box it comes in)

- Logistics

- R&D

- The alternative uses of that chip, in this case, AI

Greed is not a thing in economics. A price is a balancing act. When something is priced too low (based on the demand), the cost will go up somehow. That's how scalpers can profit on things. If something is priced too high, it will not move and sellers might drop it altogether.

A fixed MSRP is a bet, because production doesn't usually follow demand (not many companies can produce more or less based on the demand. Good luck getting more chips from TSMC tomorrow.) so some products are impossible to find at MSRP until eventually they are.

The alternative to scarcity is a higher price. Companies could do it, but it would look awful on them. So they let the scalpers do "the market".

Sanepar

Member

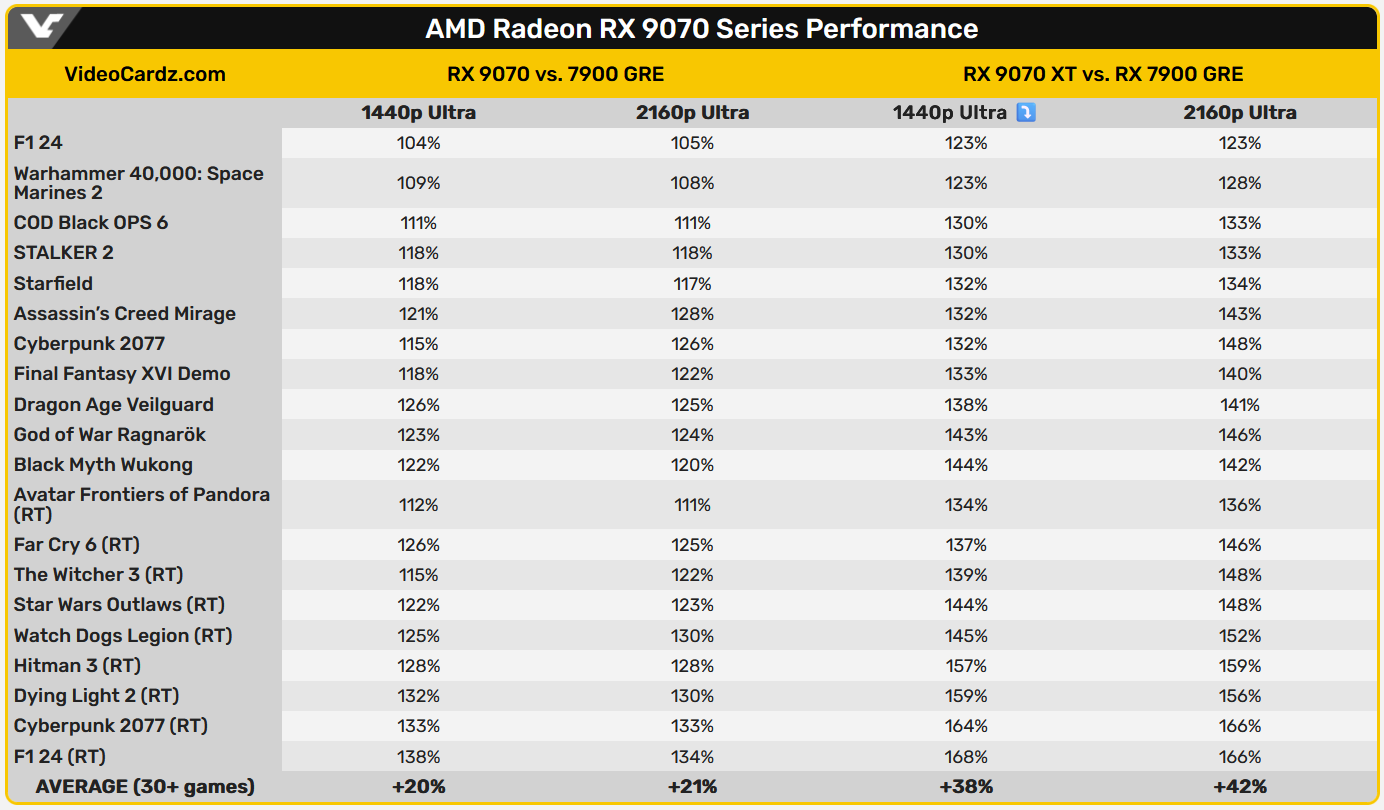

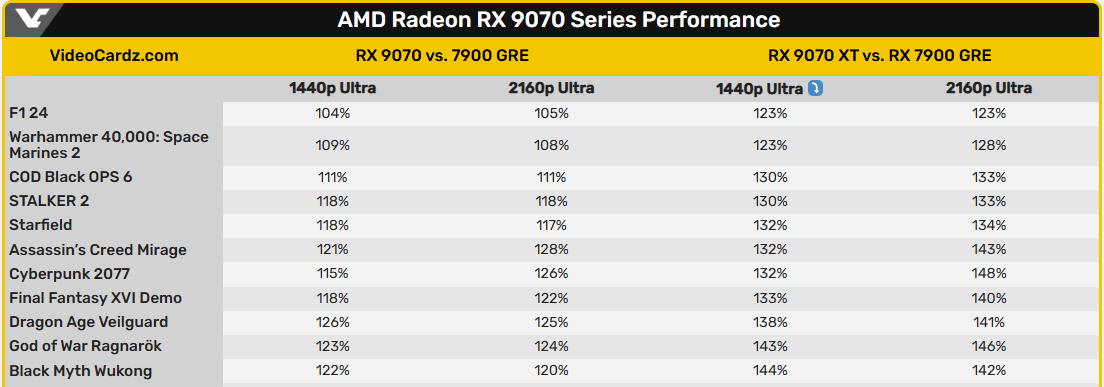

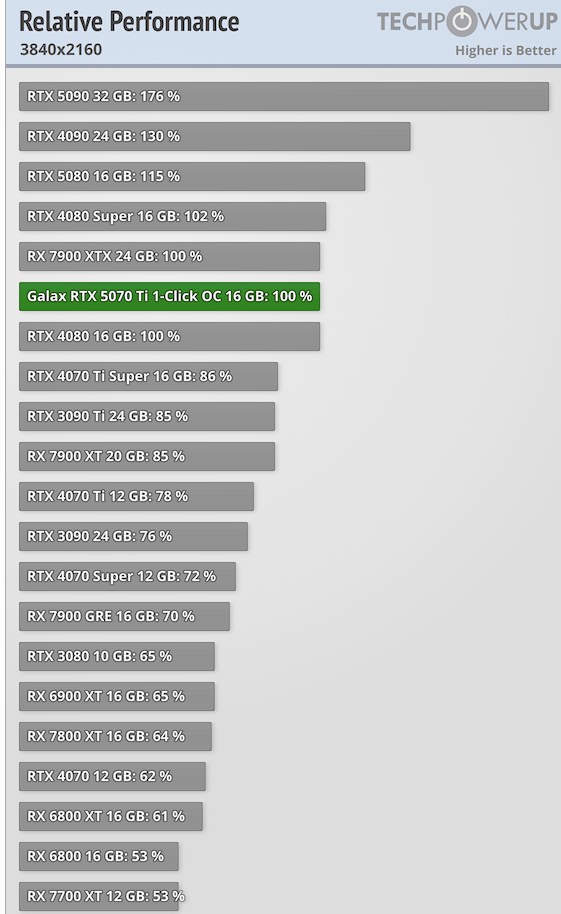

Wow this put 9070 xt on 4080 super and 7900 xtx ballpark, just 10% slower than a 5080.

TheRaidenPT

Member

As a 7900XTX owner I’m glad to see more people getting this performance level for cheaper.

I’m just worried about the XT price now, a lot of people I know are waiting for this card

dgrdsv

Member

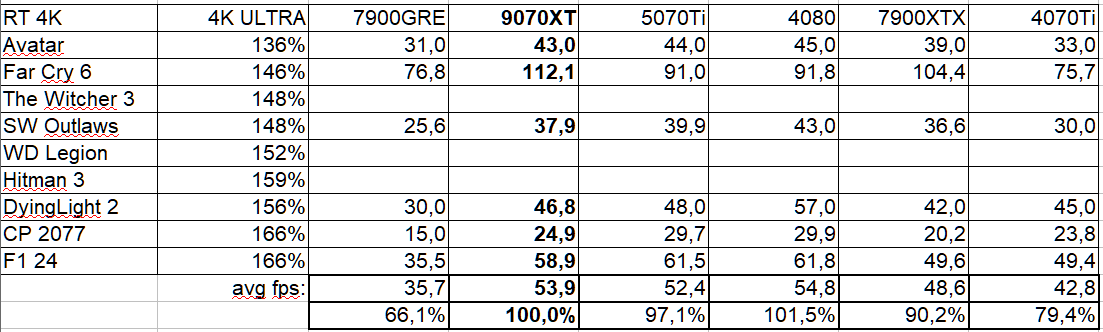

Wow this put 9070 xt on 4080 super and 7900 xtx ballpark, just 10% slower than a 5080.

The numbers are a mix of RT and non-RT which could mean anything really as 7900XTX is below 3070 in path tracing.

SolidQ

Member

I’m just worried about the XT price now, a lot of people I know are waiting for this card

Comparing vs 7900GRE mean 550$, but in last seconds AMD can put 600$

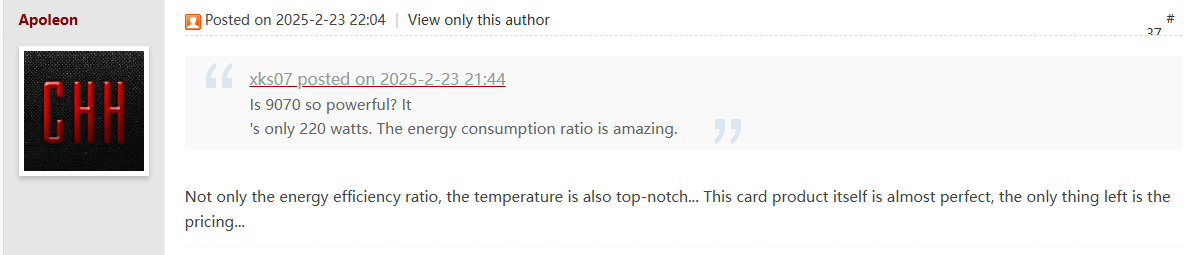

for a lot of people 9070nonXT(~7900XT perf) would be very nice gift for like 450$, it's also 220W part

RT perf is 4070S and 4070tiS respectively

owandeseis

Member

And that's not counting the big image quality gains FSR 4 will provide if we judge by that Ratchet comparison, it seemed pretty solid even in Performance mode, which was kinda unusable for FSR3.

Yea the leap on these cards from their old generation for AMD is going to be huge, enough to make them a better deal than a 5070ti? we'll see but it's looking good so far

dgrdsv

Member

Looking at the spreadsheet I would say that it should be roughly on par with 5070Ti in non-RT and some 10-20% slower on average in RT workloads.

Non-XT will likely beat 5070 in non-RT while being close to it in RT - possibly losing in 1440p as 5070 will have some issues in 4K with RT due to its 12GBs of VRAM.

All this is kinda expected stuff though. What we still don't know anything about are the prices, and these will decide whether the new AMD cards are good or not.

SolidQ

Member

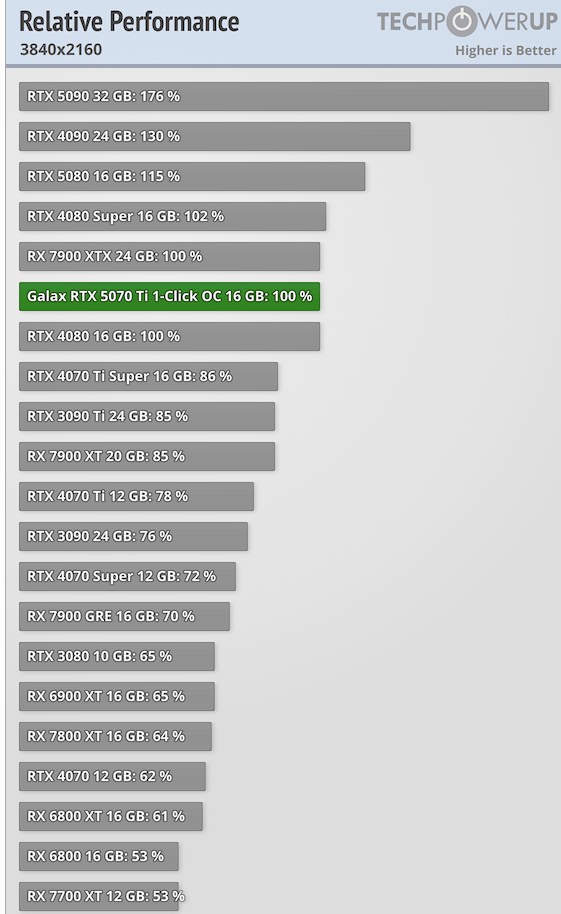

This is with RT games as well so pure raster should be lower.

You can take just this and calculate

and compare with this

i got 18-19 for nonXT and 37-38% for XT only raster.

Bojji

Member

You can take just this and calculate

and compare with this

i got 18-19 for nonXT and 37-38% for XT only raster.

It's 37% in raster in 4k so 7900XTX level:

But 7900GRE they used is weaker than 6800XT... AMD is not very honest.

owandeseis

Member

This is with RT games as well so pure raster should be lower. Also this comes from AMD and they are lying in their info when it comes to GPUs.

This is interesting:

They used some gimped GRE...

Trying to understand the image but i don't get it, can you explain?

SolidQ

Member

Trying to understand the image but i don't get it, can you explain?

Maybe he didn't notice that 9070 nonXT vs 6800XT

6800XT vs 7900GRe is 15% on TPU, 21% + 15% = 36%, so almost same as AMD saying 38%. +- different games.
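A quick check of that arithmetic, as a sketch: relative uplifts multiply rather than add. This assumes the 21% is the 9070's lead over the 7900 GRE and the 15% is the GRE's lead over the 6800 XT; `chain_uplift` is a hypothetical helper name.

```python
def chain_uplift(*uplifts_pct: float) -> float:
    """Combine successive percentage uplifts by multiplying their factors."""
    factor = 1.0
    for pct in uplifts_pct:
        factor *= 1.0 + pct / 100.0
    return (factor - 1.0) * 100.0


additive = 21 + 15                  # the shortcut used in the post
compounded = chain_uplift(21, 15)   # 1.21 * 1.15, expressed as a percentage

print(f"additive: {additive}%, compounded: {compounded:.2f}%")
```

Compounding lands at roughly 39% instead of 36%, so the estimate stays within a couple of points of AMD's 38% either way.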

ThisIsMyDog

Member

if 9070 is 16GB VRAM and below 500$ then... that's my new GPU.

Bojji

Member

Trying to understand the image but i don't get it, can you explain?

Maybe he didn't notice that 9070 nonXT vs 6800XT

6800XT vs 7900GRe is 15% on TPU, 21% + 15% = 36%, so almost same as AMD saying 38%. +- different games.

YES, that's what AMD fucked up naming does here, I didn't notice lack of "XT" here. Sorry!

Why not name it 9080 and 9070 AMD? You copied some aspects of nvidia naming but this XT bullshit is still here.

It's 37% faster in raster so 7900XTX level, now we have to see if AMD numbers are true or not (they were lying before 7900XTX launch).

SolidQ

Member

Why not name it 9080 and 9070 AMD?

because there no competition to 5080, but there also Kepler saying possible 3.4ghz, seems AMD left it for user OC

Bojji

Member

because there no competition to 5080, but there also Kepler saying possible 3.4ghz, seems AMD left it for user OC

But they should position this against their own GPUs and not nvidia, we already see that 9070XT is ~7900XTX level.

winjer

Member

Thank Lisa for canceling 144CU/550W Halo part. Worst decision in her career

The worst decision of her career was not booking a lot more wafers at TSMC, for 2020, when they had the PS5, Series S/X, RDNA2 and Zen3 launch.

So many good products, and so few wafers to go around.

And then the mining craze and Covid hit, making it even worse.

SolidQ

Member

So many good products, and so few wafers to go around.

for RDNA4 wafers not problem, even, if there Halo part.

winjer

Member

for RDNA4 wafers not problem, even, if there Halo part.

That is not what I was talking about.

In 2020, AMD had a very good lineup of products. Consoles, the best CPUs in the market and competitive GPUs for price/performance.

And a ton of demand due to Covid and mining.

But AMD didn't book enough wafers for all of that, so they lost out on a ton of sales.

SolidQ

Member

But AMD didn't book enough wafers for all of that, so they lost out on a ton of sales.

We don't know what happens there. That company secrets

Bojji

Member

9070nonXT seems temp are good

someone calculate data Using TPU/Hub

How they can calculate that when no one knows how they even tested those games (and in what places)? We know rough performance data from AMD, now we need to wait for reviews to see true performance.

SolidQ

Member

How they can calculate that when no one knows how they even tested those games (and in what places)?

We have data from AMD. People calculate it? People can have fun until reviews.

Bojji

Member

We have data from AMD. People calculate it? People can have fun until reviews.

Yeah but this is worth shit. GPUs needs to be tested in the same places using the same settings so only differences AMD showed vs. their own cards are valid (if they are not lying again).

SolidQ

Member

(if they are not lying again).

there no "UP to"

Sanepar

Member

This is with RT games as well so pure raster should be lower. Also this comes from AMD and they are lying in their info when it comes to GPUs.

This is interesting:

They used some gimped GRE...

You guys really love to be scammed by nvidia.

Bojji

Member

there no "UP to"

Yep, we will see how it will compare with reviewers data.

You guys really love to be scammed by nvidia.

Instead of being scammed by AMD? Their prices with 7xxx series were very bad at launch, 9070XT could be amazing product but it all depends on what REAL (in stores) price it will have.

JohnnyFootball

GerAlt-Right. Ciriously.

That is not what I was talking about.

In 2020, AMD had a very good lineup of products. Consoles, the best CPUs in the market and competitive GPUs for price/performance.

And a ton of demand due to Covid and mining.

But AMD didn't book enough wafers for all of that, so they lost out on a ton of sales.

Not only that, they incorrectly bet/assumed that raster performance was the end all be all and allowed features like DLSS/RT/FG to be monopolized by nvidia and only now does it seem like FSR4 is where AMD is finally getting serious.

If FSR4 is on par with DLSS3 and this card is priced at $600 or less, then it is indeed a winner of a card.

Paltheos

Member

I'm following the developments here (a little anyway, with my very limited tech knowledge). My current rig is over 10 years old with only a mid-life cycle upgrade to a Nvidia 1080 base GPU. Any upgrade will be huge for me, but I'm not looking to break bank and I don't care about high-end performance. I brought up getting a new rig to a much more knowledgeable friend of mine who suggested waiting for the new AMD models next month. My hope is that they'll be midrange that can compete on price and availability against Nvidia's equivalent (we'll see I guess).

He told me that years ago AMD's drivers were kinda shit and so compatibility was a problem but that this has apparently improved. I'm curious if anyone with experience can testify as to their experience with newer AMD cards. My googling/chatgpting suggest AMD's drivers have gotten better for mainstream gaming and emulation.

winjer

Member

Not only that, they incorrectly bet/assumed that raster performance was the end all be all and allowed features like DLSS/RT/FG to be monopolized by nvidia and only now does it seem like FSR4 is where AMD is finally getting serious.

If FSR4 is on par with DLSS3 and this card is priced at $600 or less, then it is indeed a winner of a card.

RDNA2 was a product made first and foremost for consoles. Having the RT units in the TMUs is the kind of thing that makes sense in a chip that is constrained by size, such as the SoC in a console.

Performance wasn't good, but it had the advantage of being very efficient in die usage.

Also, at the time, AI upscaling was in its infancy. DLSS1 was a piece of crap. And the first iteration of DLSS2 only came out in 2020.

Hindsight is 20-20, and it's easy to say Radeon should have gotten sooner into AI. Especially considering they already had the units in CDNA.

Reizo Ryuu

Member

I'm curious if anyone with experience can testify as to their experience with newer AMD cards.

Drivers haven't been an issue for a very long time, you don't even have to reboot anymore after installing them.

TheRaidenPT

Member

I'm following the developments here (a little anyway, with my very limited tech knowledge). My current rig is over 10 years old with only a mid-life cycle upgrade to a Nvidia 1080 base GPU. Any upgrade will be huge for me, but I'm not looking to break bank and I don't care about high-end performance. I brought up getting a new rig to a much more knowledgeable friend of mine who suggested waiting for the new AMD models next month. My hope is that they'll be midrange that can compete on price and availability against Nvidia's equivalent (we'll see I guess).

He told me that years ago AMD's drivers were kinda shit and so compatibility was a problem but that this has apparently improved. I'm curious if anyone with experience can testify as to their experience with newer AMD cards. My googling/chatgpting suggest AMD's drivers have gotten better for mainstream gaming and emulation.

I'm using an XTX and the only problem I had recently has been issues with Delta Force which got fixed with a new driver

poppabk

Cheeks Spread for Digital Only Future

I can't imagine getting a card without dlss and all nvidia features...

And I used to rotate AMD/Nvidia every generation...

Except one of Nvidias 'features' is low VRAM.

rofif

Can’t Git Gud

Except one of Nvidias 'features' is low VRAM.

yep.

I feel like even 16gb is not enough. And all the dlss features also use vram

P:axMagellanic

Member

Drivers haven't been an issue for a very long time, you don't even have to reboot anymore after installing them.

Unbelievable power of nvidias marketing team. Their cards are actually cooking themselves, fire hazards, black screening and faulty dies and still it's somehow 'amd drivers'.

Unknown Soldier

Member

The worst decision of her career was not booking a lot more wafers at TSMC, for 2020, when they had the PS5, Series S/X, RDNA2 and Zen3 launch.

So many good products, and so few wafers to go around.

And then the mining craze and Covid hit, making it even worse.

You act like you can just get in a line at TSMC for more wafers. TSMC gives more wafers to whoever is willing to pay more. AMD was never able to pay more than the likes of Apple, Qualcomm, and Nvidia. Their wafer allocation is in line with their ability to pay

winjer

Member

You act like you can just get in a line at TSMC for more wafers. TSMC gives more wafers to whoever is willing to pay more. AMD was never able to pay more than the likes of Apple, Qualcomm, and Nvidia. Their wafer allocation is in line with their ability to pay

Wafers at TSMC are bought several months in advance. And the same for all other companies.

The amount of left over wafers that a company can buy on short notice is very small.