LectureMaster

Gold Member

Launching together with Intel's new cards, didn't see my man Draugoth mention it in the thread.

www.tweaktown.com


Intel XeSS, or Xe Super Sampling, is the company's answer to NVIDIA's DLSS and AMD's FSR. However, as it utilizes the AI hardware and XMX Engines found in all Arc Graphics products, it's often seen as a better solution than FSR for maintaining image quality. Alongside Intel announcing its first next-gen Battlemage GPU with the new Intel Arc B580 today, the company has also lifted the lid on Intel XeSS 2. And yes, it includes frame generation.

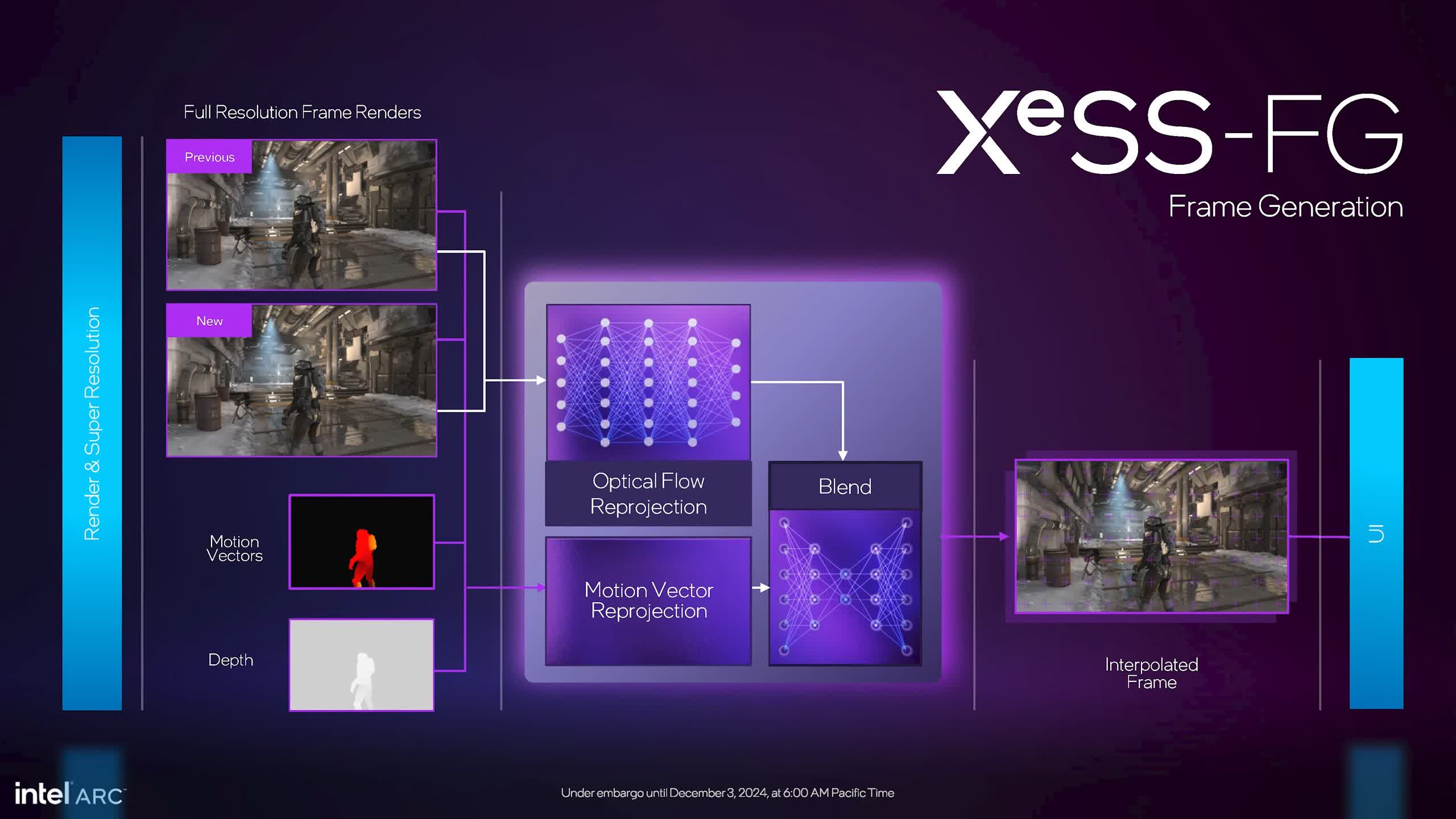

When NVIDIA launched DLSS 3 Frame Generation alongside the GeForce RTX 40 Series, it took a minute or two to understand what was happening. XeSS 2 is similar in that it combines three bits of technology - XeSS Super Resolution, XeSS-FG Frame Generation, and XeLL Low Latency. Super Resolution does the AI upscaling. Frame Generation leverages AI-powered 'Optical Flow Reprojection' and other game data to create a new frame. XeLL dramatically lowers system latency to improve responsiveness.

What does that mean? Well, it transforms F1 24's native 1440p Ultra performance on the Intel Arc B580 from 48 FPS with an overall latency of 57ms to 152 FPS with an overall latency of 28ms.
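To put those quoted figures in perspective, here is a quick back-of-the-envelope calculation. It only uses the four numbers from the article (48 FPS, 152 FPS, 57ms, 28ms); the function and key names are made up for illustration, and the frame-time values are simply 1000 / FPS, not something Intel published.

```python
def summarize(fps_before, fps_after, latency_before_ms, latency_after_ms):
    """Derive simple ratios from before/after FPS and latency figures."""
    return {
        # How many times more frames are displayed per second
        "fps_multiplier": fps_after / fps_before,
        # Average time between displayed frames, in milliseconds
        "frame_time_before_ms": 1000.0 / fps_before,
        "frame_time_after_ms": 1000.0 / fps_after,
        # Relative drop in overall latency, as a percentage
        "latency_reduction_pct": 100.0
        * (latency_before_ms - latency_after_ms)
        / latency_before_ms,
    }


# Figures quoted in the article for F1 24 at 1440p Ultra on the Arc B580
result = summarize(fps_before=48, fps_after=152,
                   latency_before_ms=57, latency_after_ms=28)

for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

So roughly 3.2x the displayed frame rate with about half the latency, going by the article's numbers.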

Yes, it's a game-changer, but like DLSS and the original XeSS, it requires game integration, so it will take a while before it becomes readily available in many games. On that note, Intel has announced the first XeSS 2 games: Assassin's Creed Shadows, Harry Potter Quidditch Champions, RoboCop Rogue City, Like a Dragon: Pirate Yakuza in Hawaii, Dying Light 2, F1 24, Ascendant, Marvel Rivals, Killing Floor III, and Citadels.

With XeSS available in over 150 games, and XeSS 2 covering both the Intel Arc B-Series and Intel's mobile APUs with integrated Arc chips, there's a good chance XeSS 2 support will ramp up in the coming months.

Intel announces XeSS 2 with new Frame Generation and Low Latency tech

Intel XeSS 2 is coming, with AI-powered Frame Generation and Low Latency technology similar to NVIDIA Reflex. And it arrives alongside Battlemage.
