Buggy Loop

Gold Member

I haven't followed all the AMD news. Do they have something for CES?

9070XT.

The GPU is rumored to be either at 7900GRE level or about 5% slower than a 4080.

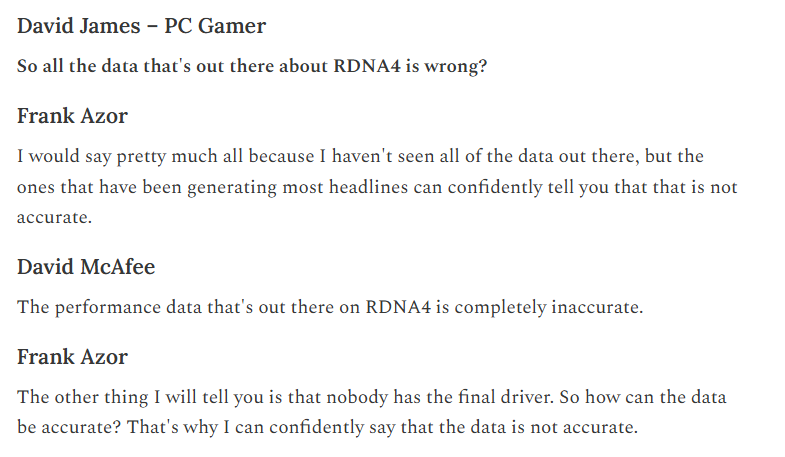

We will see, but with AMD cards it's much wiser not to believe rumors that suggest very high performance.

Hasn't the PS5 Pro taught you guys to temper your expectations?

The RX 9070 will be an intermediate card, like a 4070/5070, which has/will have 12 GB.

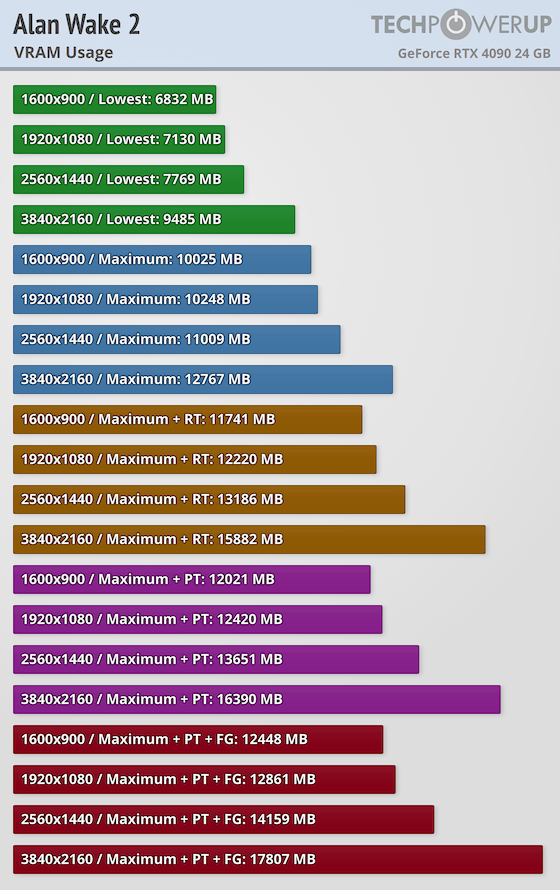

Regarding the use of VRAM, in 4K there must be at least 16GB of VRAM. In the PS6 generation, it will probably be much worse, as the console is expected to have 32 GB of RAM.

N48 has two 16GB cards and one 12GB card.

It could be that the 9070 is GRE + 5% and the 9070XT is ~4080.

Based on that Time Spy score we have this:

We don't only have Time Spy, we also have the rumor of 4080 -5% for the reference model.

As you can see, we don't have any leaks about RDNA4 or the RTX 5xxx series. Both companies are hiding things well.

We will see what the truth is quite soon.

Yeah, 14 hrs left.

...are some slides.

At least some slides is better than nothing.

Why didn't they show this at the conference?

Most likely waiting to see what Nvidia announces and pricing to make sure they don't shoot themselves in the foot 7 hours before. Why give Nvidia the heads up?

So this one tells us all. Probably somewhere between 7900GRE and 7900XT. One tier behind XTX and 4080.

I wasn't expecting RDNA4 cards to match the high-end RTX 50 series cards, but two years have passed since Nvidia launched the RTX4080, so I thought something like the RX 9070XT would finally be able to match Nvidia's RTX4080 in both raster and RT.

I like the design, these cards look beautiful, but looks can be deceiving.

ASUS unveils Radeon RX 9070 XT TUF and PRIME GPUs, confirms 16GB memory - VideoCardz.com

ASUS says RDNA4 cards are ‘coming soon’, equipped with 16GB memory and phase-changing thermal pads. With nearly zero information on Radeon RX 9070 series, ASUS has taken upon themselves to reveal details that AMD was not interested in sharing. ASUS has revealed two new models today: the TUF and...

videocardz.com

With RDNA 4, AMD claims generational SIMD performance increase on the RDNA 4 compute units. The 2nd Gen AI accelerators will boast of generational performance increase, and AMD will debut a locally-accelerated generative AI application down the line, called the AMD Adrenalin AI, which can generate images, summarize documents, and perform some linguistic/grammar tasks (rewriting), and serve as a chatbot for answering AMD-related queries. This is basically AMD's answer to NVIDIA Chat RTX. AMD's 3rd Gen Ray accelerator is expected to reduce the performance cost of ray tracing, by putting more of the ray tracing workload through dedicated hardware, offloading the SIMD engine. Lastly, AMD is expected to significantly upgrade the media acceleration and display I/O of its GPUs.

We also got our first peek at what the "Navi 48" GPU powering the Radeon RX 9070 series looks like—it features an unusual rectangular die with a 2:1 aspect ratio, which seems to lend plausibility to the popular theory that the "Navi 48" is two "Navi 44" dies joined at the hip with full cache-coherency. The GPU is rumored to feature a 256-bit GDDR6 memory interface, and 64 compute units (4,096 stream processors). The "Navi 44," on the other hand, is exactly half of this (128-bit GDDR6, 32 CU). AMD is building the "Navi 48" and "Navi 44" on the TSMC N4P (4 nm EUV) foundry node, on which it is building pretty much its entire current-generation, from mobile processors, to CPU chiplets.
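As a quick sanity check on those rumored numbers, here is a minimal Python sketch. It assumes 64 stream processors per compute unit, which is what the quoted 64 CU / 4,096 SP figure implies. The `GpuConfig` class and its field names are made up for illustration, and the specs themselves are still rumors.

```python
from dataclasses import dataclass

# Assumption: 64 stream processors per compute unit, implied by the
# rumored "64 CU (4,096 stream processors)" figure quoted above.
SP_PER_CU = 64


@dataclass(frozen=True)
class GpuConfig:
    """Hypothetical container for the rumored specs; not an official spec sheet."""
    name: str
    compute_units: int
    bus_width_bits: int

    @property
    def stream_processors(self) -> int:
        # Total shader count is simply CUs times SPs per CU.
        return self.compute_units * SP_PER_CU


navi48 = GpuConfig("Navi 48", compute_units=64, bus_width_bits=256)
navi44 = GpuConfig("Navi 44", compute_units=32, bus_width_bits=128)

for gpu in (navi48, navi44):
    print(f"{gpu.name}: {gpu.compute_units} CU -> {gpu.stream_processors} SP, "
          f"{gpu.bus_width_bits}-bit bus")
```

With these inputs, Navi 44 comes out at exactly half of Navi 48 in both shader count and bus width, which is consistent with the "two dies joined at the hip" theory but does not prove it.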

If the 9070XT is going to be only as fast as 7900GRE

Isn't AMD teasing us with the 7900XT?

I thought there was something wrong with these leaks when I saw the size of these cards. These 9070XT cards are absolutely massive, comparable in size to the 7900XTX and RTX4080, and why would you need such a huge cooler for a mid-range card? The 7900GRE is relatively small compared to the 9070XT.

AMD Debuts Radeon RX 9070 XT and RX 9070 Powered by RDNA 4, and FSR 4

AMD at the 2025 International CES announced the Radeon RX 9070 XT and Radeon RX 9070 desktop performance-segment graphics cards. These will be the face of AMD's next generation of gaming graphics products, and will be powered by the new RDNA 4 graphics architecture. AMD hopes to launch both...

www.techpowerup.com

Maybe the 9070XT will be as fast as RTX4080 after all.

No spec announced at all, WTH?

There will be a special event for RDNA4.

Although that is true, anyone considering running serious AI workloads will go for a 4090.

Yeah, that's the curious bit.

AMD still has the possibility of surprising, if it does its homework well. But that's precisely the problem: AMD always makes ugly, crude mistakes.

If the 9070 XT is capable of being at the level of the 5070, they will want to charge 499 dollars...

I mean, isn't this the general AMD motto? Take the pricing of the Nvidia card closest in raster performance and take 10% off the MSRP?

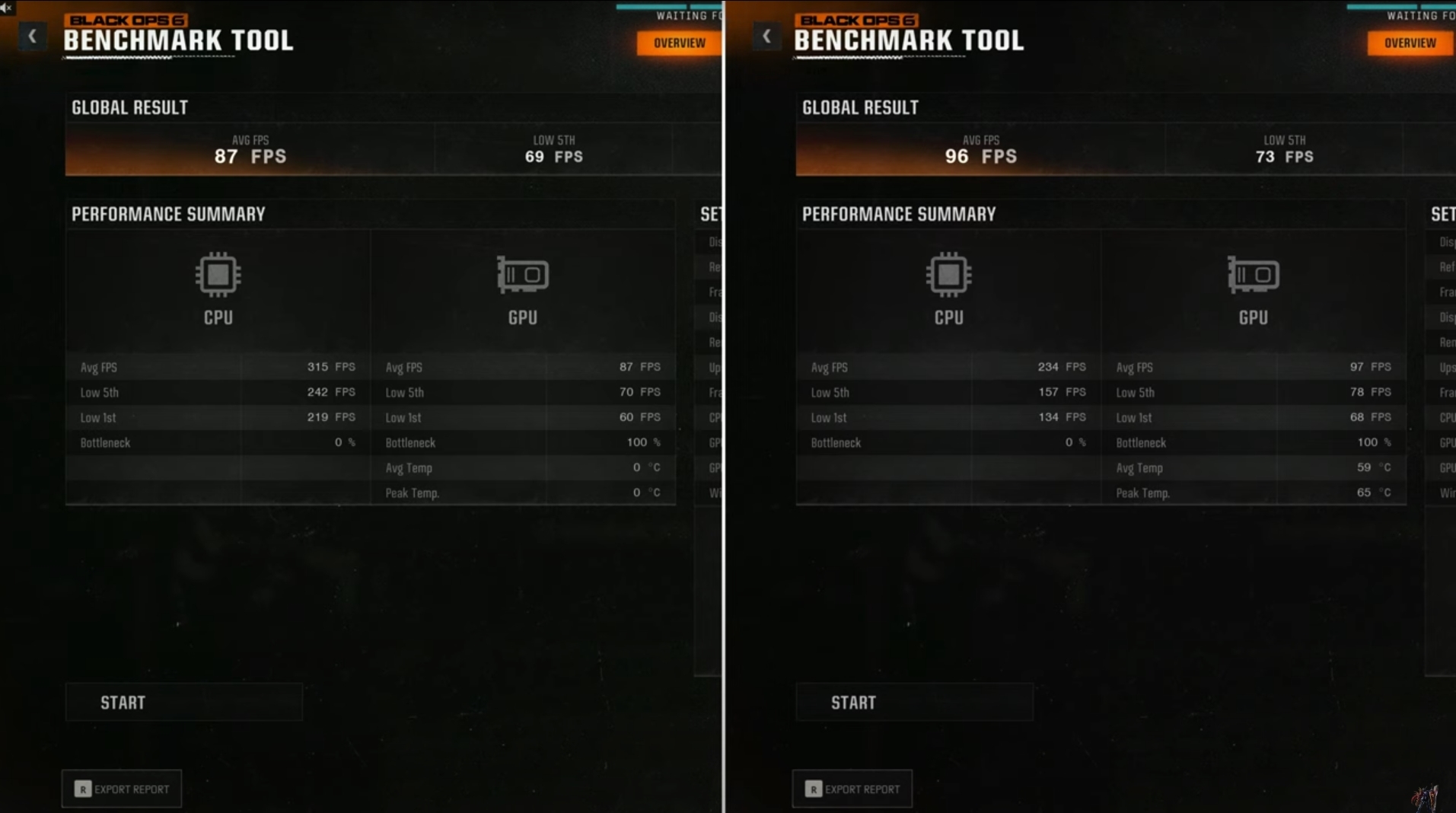

In Call of Duty: Black Ops 6, the 9070XT is faster than the 7900XTX on alpha drivers. The difference is around 15%.

They said ~4080, and the 7900XTX is much faster than this GPU in this game.

9070XT is 99fps.

Do you really trust that benchmark from the potatoheads at IGN?

Same ballpark as a 7900 XTX when it comes to Time Spy Extreme and Speed Way. Even slightly faster despite a potential driver bug. That's significantly better than the 7900 XT or below rumors we had. With FSR4, this makes those cards (the 9070 XT/X at least) interesting options in the high end.

Is this the 9070 or XT/X?

I wonder how much GDDR6 is limiting the 9070, compared to Blackwell, which already has GDDR7.

Probably not as much as you would think, seeing as it'll be pushing fewer frames.

You really don't understand that memory bandwidth is a result of the bus width and the memory clock speed.
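To put rough numbers on that: peak memory bandwidth is the bus width in bytes times the effective per-pin data rate. A minimal Python sketch follows. The 256-bit bus is the rumored Navi 48 figure from earlier in the thread, while the 20 Gbps (GDDR6) and 28 Gbps (GDDR7) data rates are placeholder assumptions for illustration, not confirmed specs for any card, and `peak_bandwidth_gbs` is a made-up helper name.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s.

    (bus width in bits / 8) gives bytes per transfer across the bus;
    multiplying by the per-pin data rate in Gbit/s gives GB/s.
    """
    return bus_width_bits / 8 * data_rate_gbps


# Assumed data rates, purely illustrative.
gddr6 = peak_bandwidth_gbs(256, 20.0)
gddr7 = peak_bandwidth_gbs(256, 28.0)

print(f"256-bit GDDR6 @ 20 Gbps: {gddr6:.0f} GB/s")
print(f"256-bit GDDR7 @ 28 Gbps: {gddr7:.0f} GB/s")
print(f"GDDR7 advantage at the same bus width: {gddr7 / gddr6 - 1:.0%}")
```

This is only the theoretical peak. How much it matters in games depends on cache sizes and how many frames the GPU is actually pushing, which is the point being argued above.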