Oh my FG.

Upscaling is getting to higher res from lower res, regardless of what PF shills told you. (How is 8K gaming with the 3090 going, by the way?)

Upscaling is one of the steps in the, cough, Stable Diffusion pipeline.

TAA is what does "it" based on "temporal data".

Nobody knows exactly what is done by AI afterwards, but most likely just denoising.

The actual AI upscaling, DLSS 1.0, failed miserably.

And no, neural networks do not really need "motion vectors" to function, our brain being a good example.

Yet it failed. (Things are of course more complex than they sound.)

The idea that an AI approach is better at every task is misinformed.

At things like denoising, though, AI should excel.

Some youtubers may refer to DLSS technology as upscaling because people tend not to care about small details. The small details however can make all the difference.

Nvidia engineers refer to DLSS as either image reconstruction or super-resolution, and given what this technology does, they are absolutely right.

In this talk, Edward Liu from NVIDIA Applied Deep Learning Research delves into the latest research progress on Deep Learning Super Sampling (DLSS), which

www.nvidia.com

Boosts performance for all GeForce RTX GPUs.

www.nvidia.com

"DLSS samples multiple lower resolution images and uses motion data and feedback from prior frames to

reconstruct native quality images."

What's interesting is that some DLSS buffers still run at native resolution (HUD elements, for example), but of course most of the work consists of sampling multiple lower resolution images. To put it simply, DLSS merges previous frames into a single high-quality frame.
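To make the "merging previous frames" idea concrete, here is a toy 1D sketch in Python. It is not how DLSS works internally (nobody outside NVIDIA knows the exact details); it only assumes a static scene and a hypothetical renderer that shifts its sampling offset each frame. The names `render_low_res` and `accumulate` are made up for illustration.

```python
def render_low_res(scene, factor, offset):
    """Sample every `factor`-th value of the high-res scene, starting at `offset`.

    The changing offset stands in for per-frame sub-pixel jitter.
    """
    return scene[offset::factor]


def accumulate(frames, factor, size):
    """Scatter each jittered low-res frame back onto the high-res grid."""
    merged = [None] * size
    for offset, frame in frames:
        for i, value in enumerate(frame):
            merged[offset + i * factor] = value
    return merged


scene = [3, 1, 4, 1, 5, 9, 2, 6]  # the "native" signal we never render directly
factor = 2                        # each frame only has half the samples

# One low-res frame per jitter offset.
frames = [(off, render_low_res(scene, factor, off)) for off in range(factor)]

single = accumulate(frames[:1], factor, len(scene))  # one frame: half the grid is empty
merged = accumulate(frames, factor, len(scene))      # all frames: grid fully covered

print(single)
print(merged == scene)
```

With a static scene the merged result matches the native signal exactly. Once things move, old samples land in the wrong place, and deciding which samples to keep or reject is the hard part of the problem.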

A native 4K image has about 8.3M pixels, but by stitching together several 3.7M-pixel frames (DLSS Q renders at 1440p internally), you get a much more detailed image than native 4K. You can do this without AI (FSR2 or TSR, for example) and still get results very comparable to AI-powered DLSS on a static image, but merged frames can show shimmering and other artefacts during motion. You need AI to analyse the images and choose how to render the merged details without artefacts.
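The pixel counts are easy to check. A quick Python sketch, assuming the standard 3840x2160 and 2560x1440 resolutions, and assuming (optimistically) that every sample from every frame is unique and usable:

```python
import math


def pixels(width, height):
    """Total pixel count for a given resolution."""
    return width * height


native_4k = pixels(3840, 2160)       # 8,294,400 pixels
internal_1440p = pixels(2560, 1440)  # 3,686,400 pixels (DLSS Quality at 4K output)

# How many internal frames hold as many samples as one native 4K frame.
ratio = native_4k / internal_1440p
frames_to_match_native = math.ceil(ratio)

print(native_4k, internal_1440p, ratio, frames_to_match_native)
```

So under that assumption three accumulated 1440p frames already carry more raw samples than one native 4K frame, and anything beyond that works like supersampling.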

I tried playing the RE4 remake at 2880p FSRQ, but even when downsampled to 1440p I saw very distracting shimmering, even without moving the camera, because trees and vegetation still move in the wind and create shimmering. I wish this game supported DLSS, but it is what it is. AMD-sponsored games will not include the far superior DLSS image reconstruction.

Upscaling, as the name suggests, simply increases the size of the image in the simplest way possible, but this process cannot add any new detail and will always degrade image quality, because you cannot upscale pixels without losing quality due to uneven pixel scaling (and even integer scaling will ruin the image, because it makes pixels look square instead of round, which causes pixelation and aliasing). DLSS does the exact opposite, so why would you want to refer to it as upscaling? That makes no sense.
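Here is what I mean by plain upscaling, as a minimal Python sketch using nearest-neighbour scaling on a single row of pixels. The function name `nearest_neighbour` and the sample values are just for illustration; real scalers (bilinear, bicubic) blend neighbours instead of copying them, but they still only work with the pixels they are given.

```python
from collections import Counter


def nearest_neighbour(row, new_width):
    """Stretch a row of pixels to `new_width` by copying the nearest source pixel."""
    old_width = len(row)
    return [row[i * old_width // new_width] for i in range(new_width)]


row = [10, 20, 30, 40]

integer_scaled = nearest_neighbour(row, 8)  # 2x: every pixel is copied twice
uneven_scaled = nearest_neighbour(row, 6)   # 1.5x: some pixels copied twice, some once

print(integer_scaled)
print(uneven_scaled)
print(Counter(uneven_scaled))

# No new values appear in either result, only copies of the original pixels.
print(set(integer_scaled) == set(row), set(uneven_scaled) == set(row))
```

At 1.5x the source pixels end up with different widths on screen, which is the uneven scaling I am talking about, and in both cases the output contains nothing that was not already in the input.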

Believe it or not, in some games even DLSS Performance looks better than native TAA. In RDR2, for example, the native TAA looks very soft, and there's even more blur when you move the camera. The DLSS version built into the game is not good either, but with the updated 3.8.1 DLSS DLL file the image looks much sharper (both static and in motion) even when you choose DLSS Performance.

The 7900XTX has a whopping 24GB of VRAM, letting you run models that the 4080 cannot.

It still costs less than 4080 where I live.

Whether a 16-17% perf difference in the RT gimmick justifies it... I am not sure.

It's not like the market is driven by informed decisions anyhow.

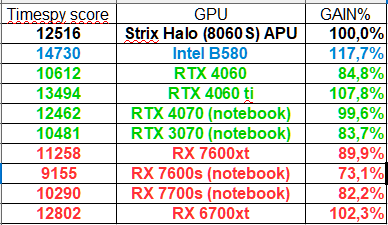

My RTX4080S has 22% faster RT on average, but in games where RT really counts (heavy RT workloads) you will often see 50% at minimum. In PT games the 7900XTX can be 3.5x slower, and that's a lot. In Black Myth Wukong, for example, medium PT on my card at 1440p only costs 3% performance compared to "lumen" (I get 123fps with PT medium and 127fps with lumen). Try to run even PT medium on the AMD RX7900XTX and no amount of tweaking will give you playable results.

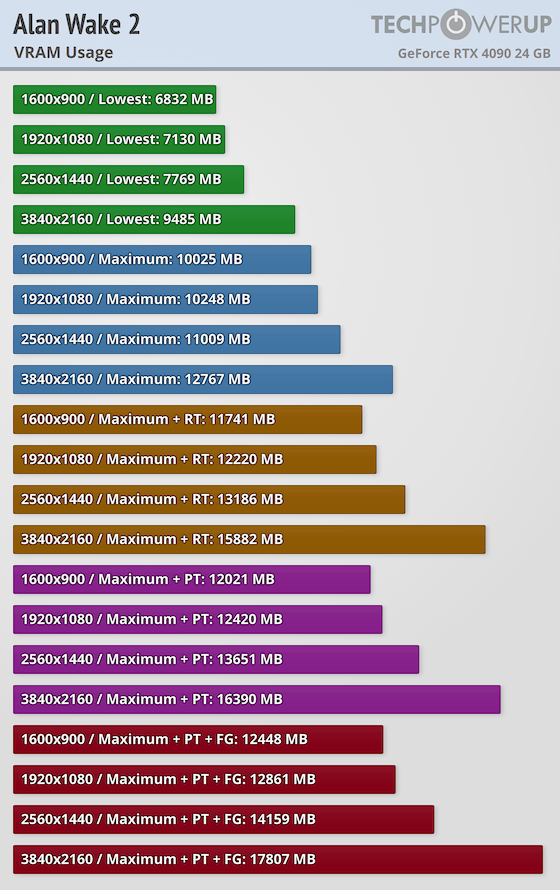

The RX7900XTX has 24GB VRAM. Of course that's more than the RTX4080S's 16GB VRAM, but it's not something that makes a real difference in current games. Most games use 9-12GB VRAM even at 4K. There are a few games that can use more than 16GB VRAM, but you need to turn on PT + FG at 4K native. My card can't even run such extremely high settings. I need to use DLSS to get smooth fps, and then my card is no longer VRAM limited. The AMD card has 24GB, but with 4x worse performance in PT you aren't going to play the most VRAM-intensive PT games anyway.

VRAM requirements will skyrocket when developers start porting PS6 games to PC, and that won't happen any time soon (2028/2029). I have heard the RDNA4 RX9700 will "only" have 16GB too. It seems even AMD knows 24GB in a gaming GPU is overkill at the moment. I would be worried if my card only had 12GB VRAM (it would still be enough with some tweaks in a few games), but 16GB is still plenty.

Both DLSS and FG can be considered gimmicks (very useful, but still). RT however is not a gimmick, just the opposite. Do you even know why RT is demanding? It's because you aren't faking the lighting effects with raster gimmicks (SSR, prebaked lighting, shadow cascades), yet you want to call it a gimmick.

I want to vomit every time I see AMD fans trying to suggest that RT is too demanding to be usable. On my RTX4080S RT runs surprisingly well (in some games I only saw a 1fps difference between RT on and off). I have not played a single game where turning on RT pushed performance into unplayable territory. Quite a few RT games in my library run at well over 60fps even at 4K native, and some even at well over 120fps; for example, RE3 Remake runs at around 130-200fps and RE Village at 120-160fps. Of course the most demanding RT games (The Witcher 3, Cyberpunk) need the help of DLSS to get into high refresh rate territory, but I don't mind using DLSS given how well it works. I would be stupid not to use it. I always use DLSS even if I don't need more fps, because thanks to DLSS I'm able to get a sharper image in every single game (DLDSR + DLSS absolutely destroys native TAA when it comes to image quality).

Before I upgraded my PC I really wasn't expecting to play Cyberpunk with RT at 140-170fps at 1440p, even with DLSS. Even at 4K, thanks to DLSS, I can get a very smooth 100-130fps with psycho RT and 80-100fps with PT.

RT games run great on my PC, and this technology absolutely does make a difference. When I first played The Witcher 3 with RT, I was shocked at how much better it looked. Lighting with RT was no longer flat, because RT adds indirect lighting / shadows. RT reflections look much sharper and no longer fade during movement. Also, thanks to RT, shadows no longer draw in a few metres in front of the character. It's impossible to ignore such a huge difference unless you really don't care about graphics at all. RT is also very scalable. In some games SSR costs more than RT reflections. You need to turn on every single RT effect to tank performance on my card, and even then performance is still good.

It still costs less than 4080 where I live.

You know what's funny? Many people chose the 7900XTX over the 4080S simply because the AMD card is $100 cheaper, but that's not the case in the long run. I watched bank4buckPCgamer's YT gameplay videos, and his 7900XTX ALWAYS draws 465W at 99% GPU usage. My RTX4080S draws between 260-315W depending on the game (at 99% GPU usage), so that's about a 150W difference. My entire PC draws 430W at max. 150W in use for 8 hours (480 minutes) a day is 1.20 kWh per day, or 438.00 kWh per year. I have to pay 452zł for that (109 USD at today's exchange rate). In my country, the 7900XTX would definitely end up costing more money in the long run, maybe not in the first year because I'm not playing for 8 hours daily, but after 3 years that would be the case.
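If you want to plug in your own numbers, the calculation above can be sketched in Python like this. The electricity price is not a quoted tariff; it is just what my figures imply (452zł / 438 kWh, roughly 1.03zł per kWh). The function names are made up for this example, and the break-even helper assumes the $100 price gap and the 109 USD yearly figure from above.

```python
def yearly_energy_kwh(extra_watts, hours_per_day, days=365):
    """Extra energy used per year for a given power difference and daily play time."""
    return extra_watts / 1000 * hours_per_day * days


def yearly_cost(extra_watts, hours_per_day, price_per_kwh):
    """Extra electricity cost per year, in whatever currency the price is in."""
    return yearly_energy_kwh(extra_watts, hours_per_day) * price_per_kwh


def break_even_years(price_gap, cost_per_year):
    """Years until the extra electricity cost eats up the purchase price gap."""
    return price_gap / cost_per_year


# Assumption: price per kWh implied by the figures in the post.
PRICE_PLN_PER_KWH = 452 / 438

energy_8h = yearly_energy_kwh(150, 8)                   # about 438 kWh
cost_8h_pln = yearly_cost(150, 8, PRICE_PLN_PER_KWH)    # about 452 zł
cost_2h_pln = yearly_cost(150, 2, PRICE_PLN_PER_KWH)    # a more casual 2 hours a day

# 109 USD per year at 8 hours a day, scaled linearly to other play times.
years_at_8h = break_even_years(100, 109)
years_at_3h = break_even_years(100, 109 * 3 / 8)

print(round(energy_8h, 2), round(cost_8h_pln, 2), round(cost_2h_pln, 2))
print(round(years_at_8h, 2), round(years_at_3h, 2))
```

The result scales linearly with both the power difference and the hours played, so someone who plays less or pays less for electricity will take proportionally longer to reach the break-even point.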